Haowei Lin

E-mail: linhaowei (at) pku (dot) edu (dot) cn

I am Haowei Lin, a third-year Ph.D. student at the Institute for Artificial Intelligence, Peking University, co-advised by Prof. Yitao Liang, Prof. Di He, and Prof. Jianzhu Ma.

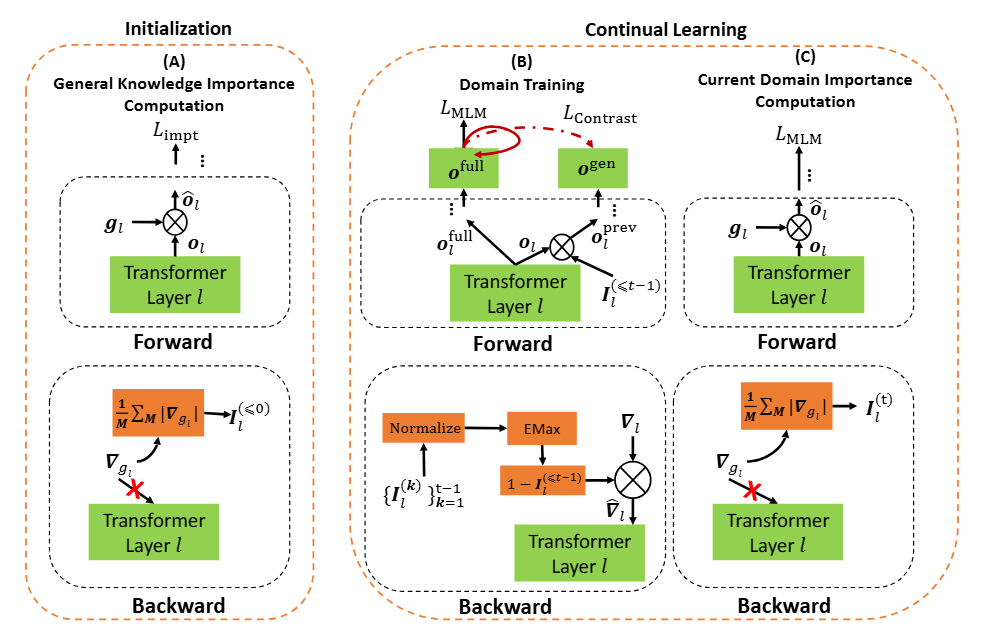

I received my Bachelor’s degree in Artificial Intelligence from Yuanpei College, Peking University, where I was fortunate to work with Prof. Bing Liu and Dr. Zixuan Ke on OOD detection, continual learning, and language models. We are the first to propose the task of continual pre-training for LLMs (EMNLP22, ICLR23), and the first to apply OOD detection methods to continual learning (EMNLP23, ICLR24).

I work on unified cross-modal generative foundation models (GFMs) for scientific discovery. My current research focuses on:

- Foundations and unification of GFMs. Developing practical insights that apply to both diffusion models (including flow matching) and autoregressive architectures, and studying their scaling laws and training-free guidance (TFG).

- Cross-modal generative modeling. Building models that operate across language, video, MDPs, and 3D/molecular data.

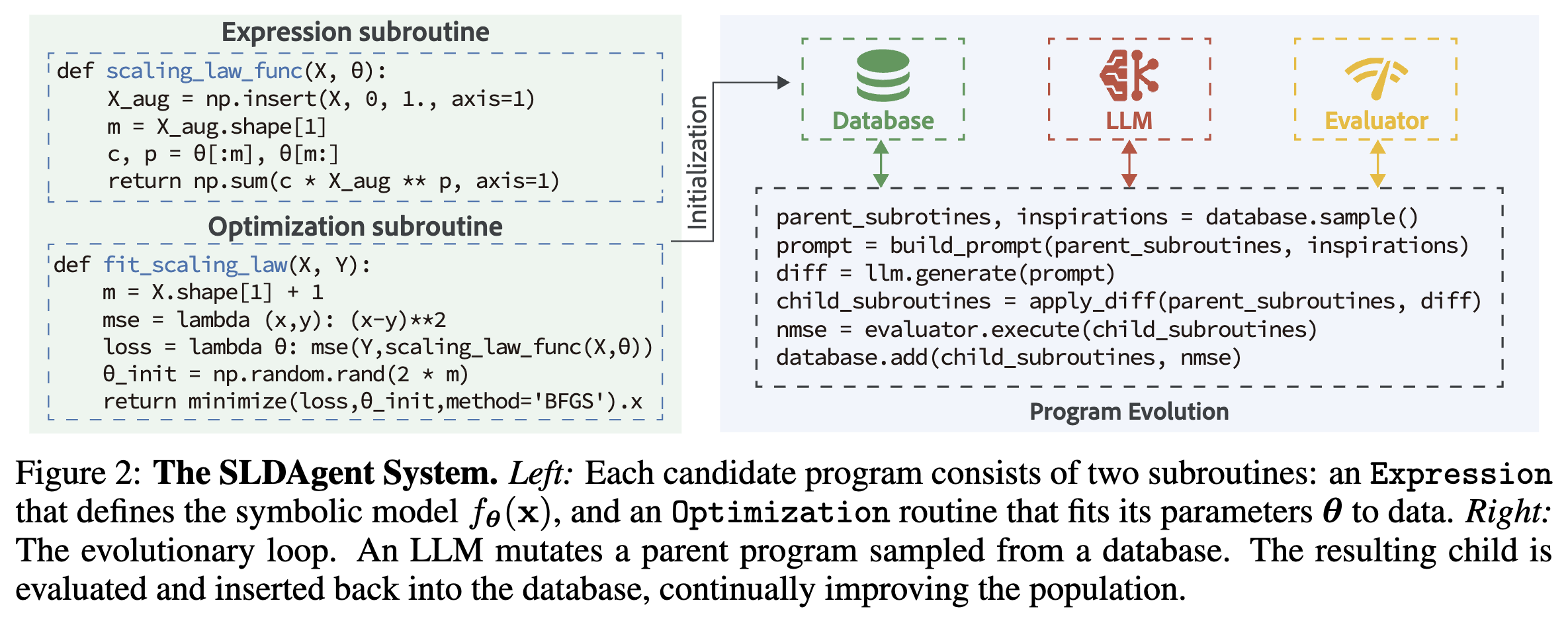

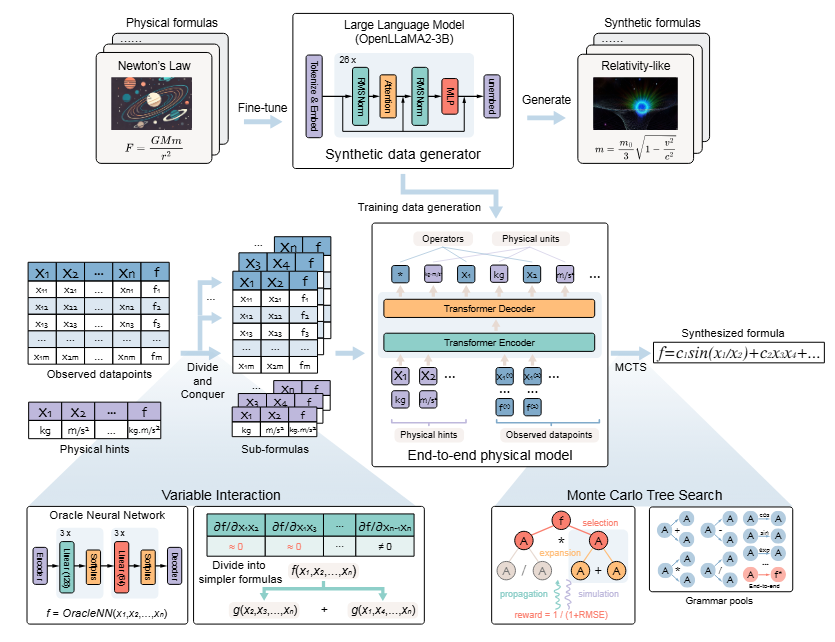

- AI for scientific discovery. Applying and post-training GFMs for complex reasoning, open-world gaming, and discovering new laws in space physics and neural network scaling.

Outside of research, I enjoy music, including singing and playing the guitar.

If you’re interested in working with me on GFMs / AI Scientist, please contact me through e-mail.

news

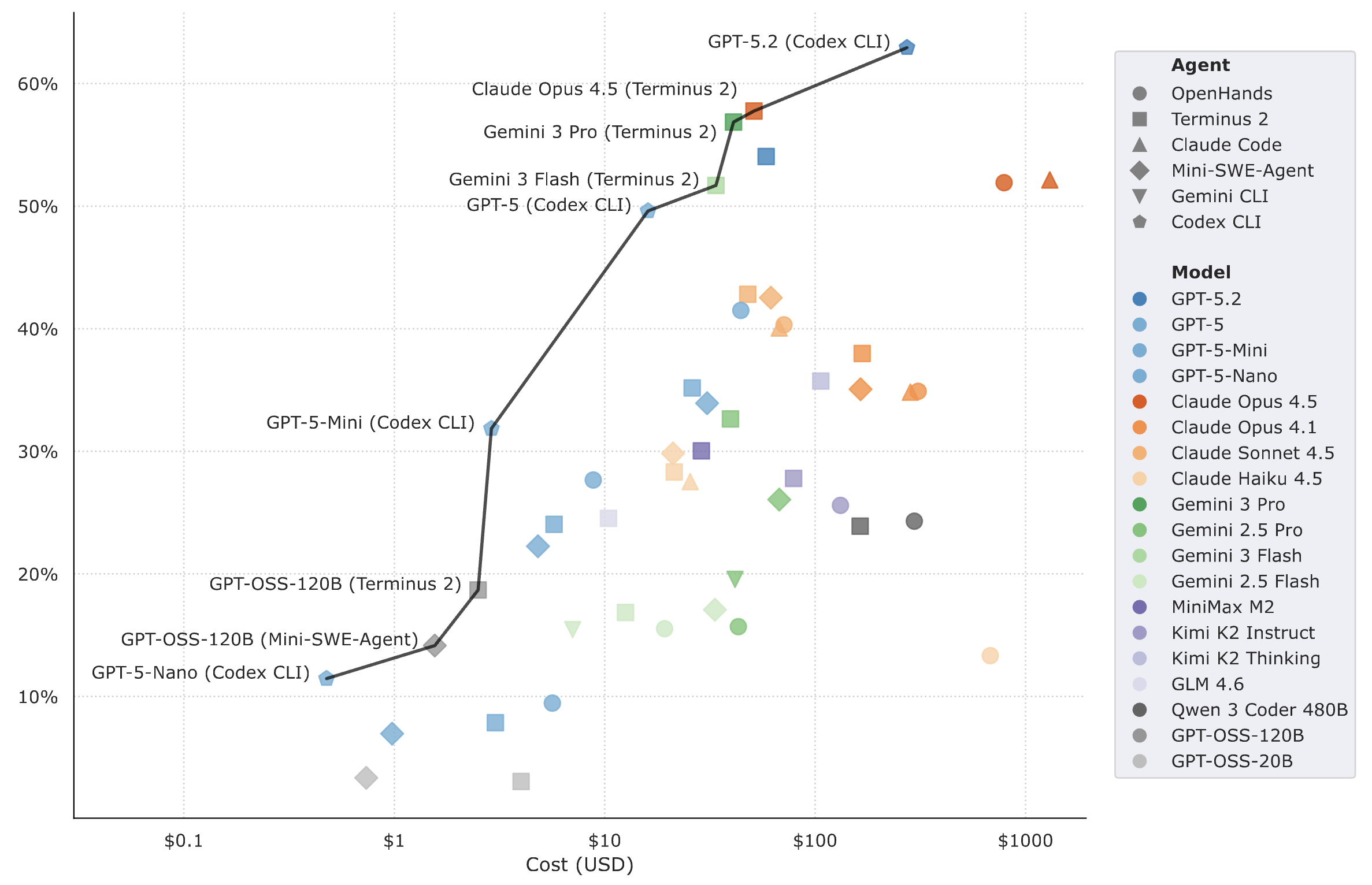

| Date | News |
|---|---|
| Dec 07, 2025 | Glad to launch a new blog on Scaling Law Discovery (SLD) (paper). We hope our work on SLD helps advance foundation model development and pushes the boundaries of AI Scientist. Code, dataset, benchmarks, and leaderboard are all publicly available. |
| Oct 21, 2025 | Excited to be a core contributor of adapters in Terminal-Bench, which convert all agentic benchmarks (e.g., SWE-related ones) into a unified format for T-Bench! Happy to see OAI, GDM, Anthropic, DeepSeek, etc. using T-Bench for model evaluation in their model releases. |
| Oct 20, 2025 | Our paper on AI for scientific discovery was published in Nature Machine Intelligence as a cover paper! |