Haowei Lin

E-mail: linhaowei (at) pku (dot) edu (dot) cn

I am Haowei Lin, a third-year Ph.D. student at the Institute for Artificial Intelligence, Peking University, co-advised by Prof. Yitao Liang, Prof. Di He, and Prof. Jianzhu Ma.

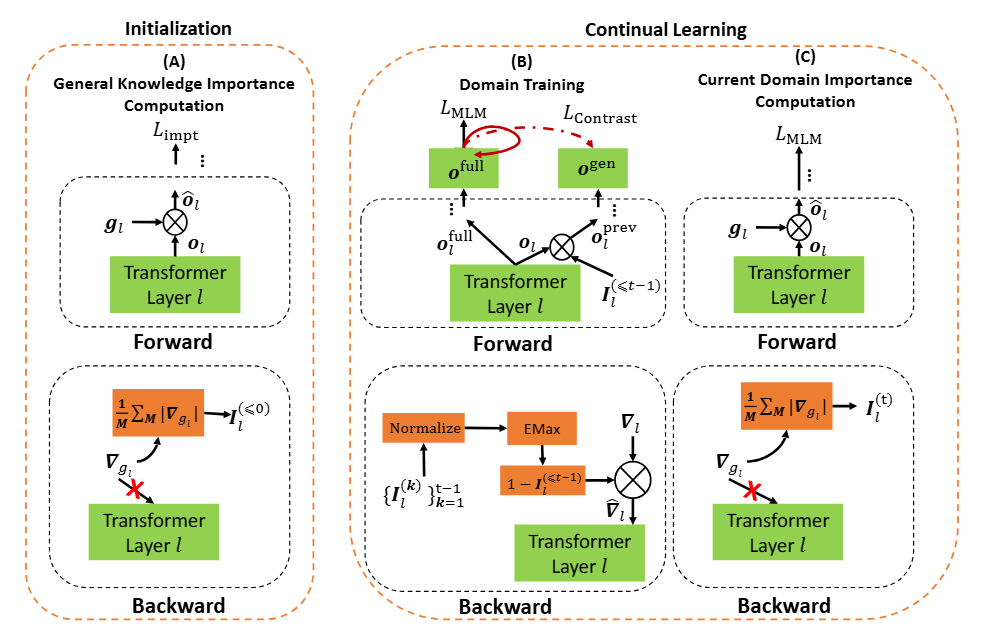

I received my Bachelor’s degree in Artificial Intelligence from Yuanpei College, Peking University. During my undergraduate studies, I was fortunate to work with Prof. Bing Liu and Dr. Zixuan Ke on out-of-distribution (OOD) detection, continual learning, and language models. We were the first to propose the task of continual pre-training for LLMs (EMNLP22, ICLR23), and the first to apply OOD detection methods to continual learning (EMNLP23, ICLR24).

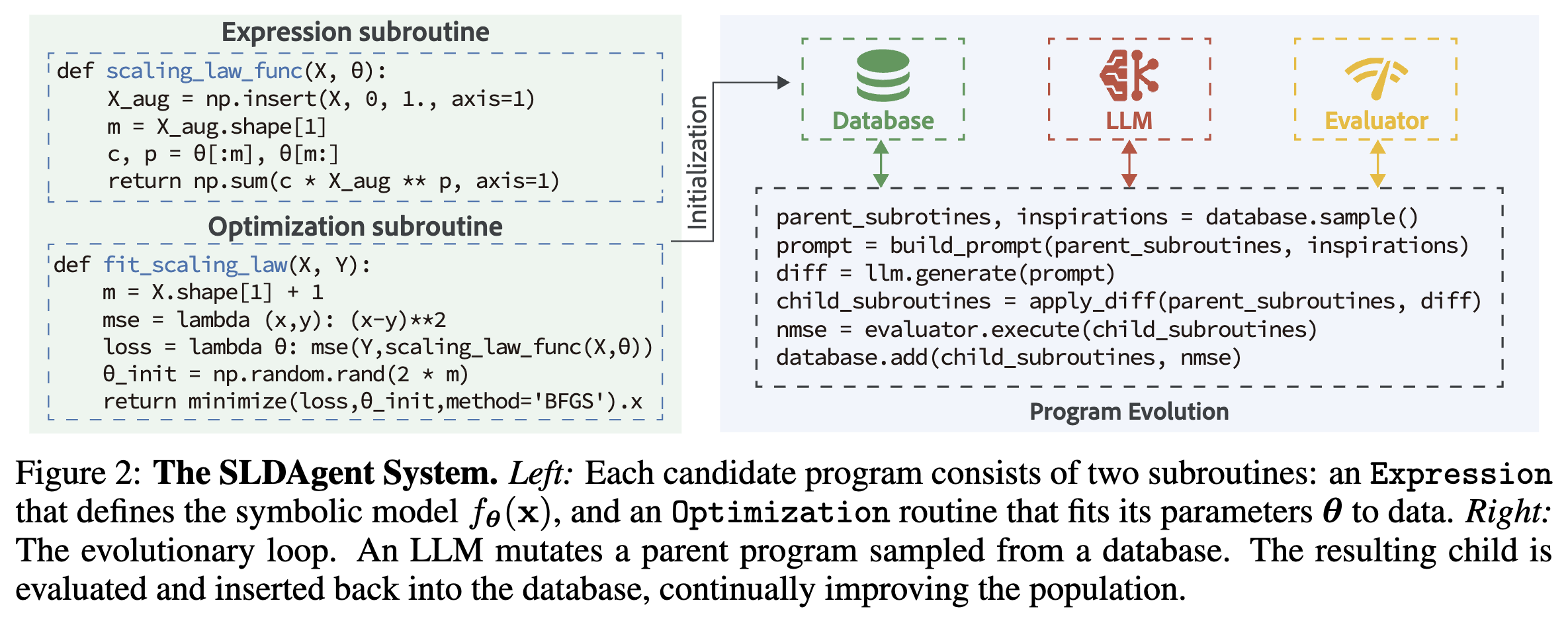

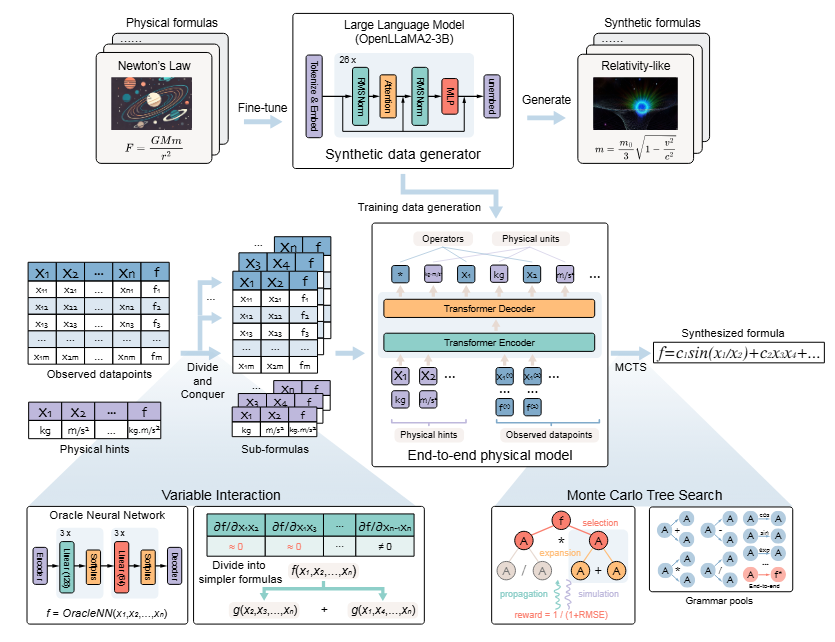

My Ph.D. research focuses on AI for Science and spans two complementary directions. First, I study generative foundation models across modalities such as text, video, MDPs, 3D, and molecules. In collaboration with Prof. Stefano Ermon and Prof. Yilun Du, we conducted a series of studies on inference-time scaling for non-autoregressive models (the TFG series: NeurIPS24, ICLR25, ICLR26). Second, I am interested in building AI Scientists and treating AI research itself as a new axis for AI4Science. As an initial step, we studied Scaling Law Discovery (ICML24, ICLR26) to unveil the physics of AI.

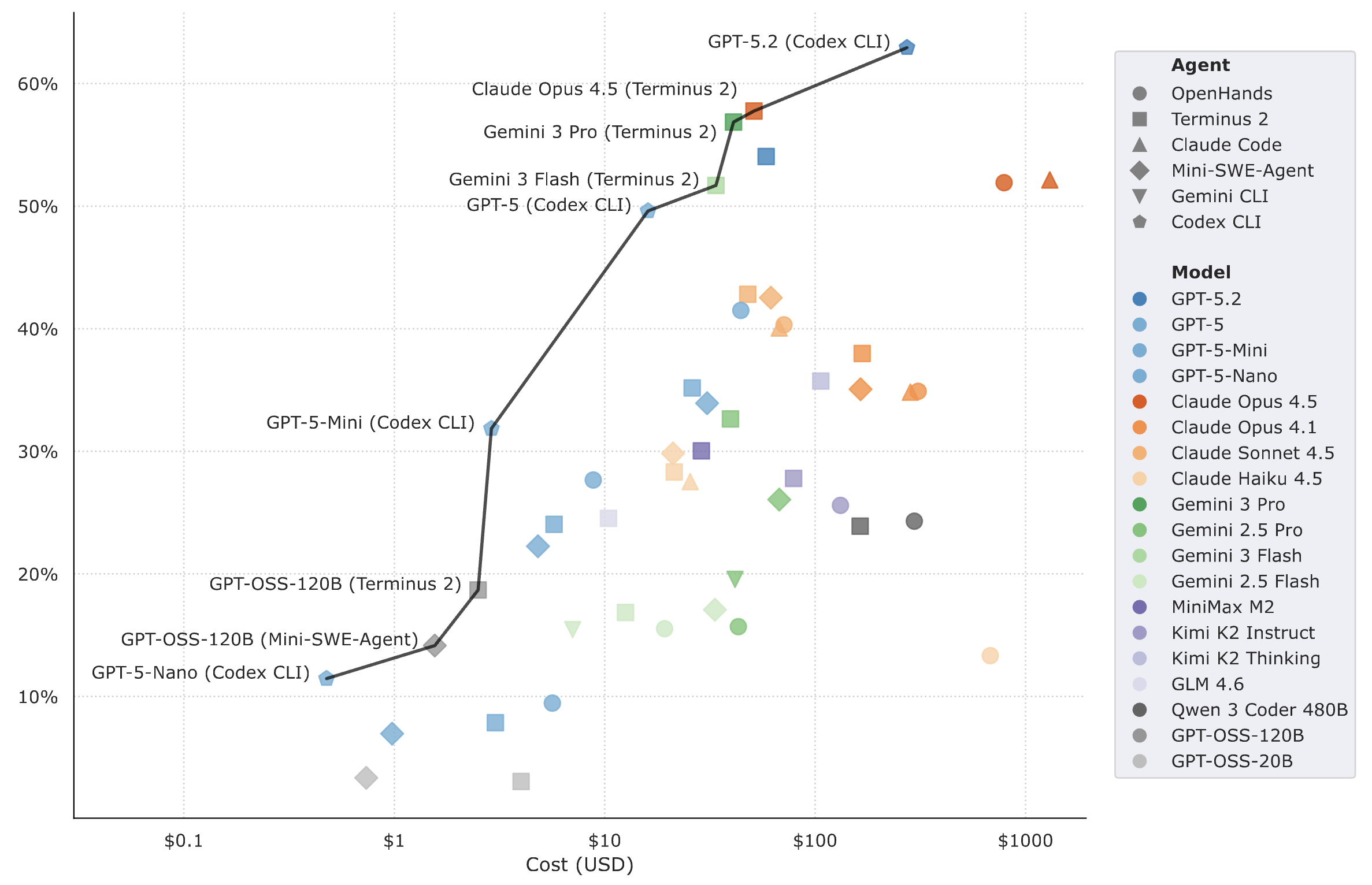

Beyond research, I enjoy contributing to open source. I am a core developer of Harbor Adapters, a subproject of Terminal-Bench, an evaluation harness widely used in recent frontier model releases (e.g., GPT, Gemini, Claude).

If you’re interested in collaborating on generative foundation models or AI Scientist research, feel free to reach out via email.

news

| Date | Update |
|---|---|
| Dec 07, 2025 | Glad to launch a new blog post on Scaling Law Discovery (SLD) (paper). We hope our work on SLD helps advance foundation model development and pushes the boundaries of AI Scientist research. The code, dataset, benchmarks, and leaderboard are all publicly available. |
| Oct 21, 2025 | Excited to be a core contributor to the adapters in Terminal-Bench, which convert existing agentic benchmarks (e.g., SWE-related ones) into a unified Terminal-Bench format! Happy to see OpenAI, Google DeepMind, Anthropic, DeepSeek, and others using Terminal-Bench for evaluation in their model releases. |
| Oct 20, 2025 | Our paper on AI for scientific discovery was published in Nature Machine Intelligence as a cover paper! |